1. Overview

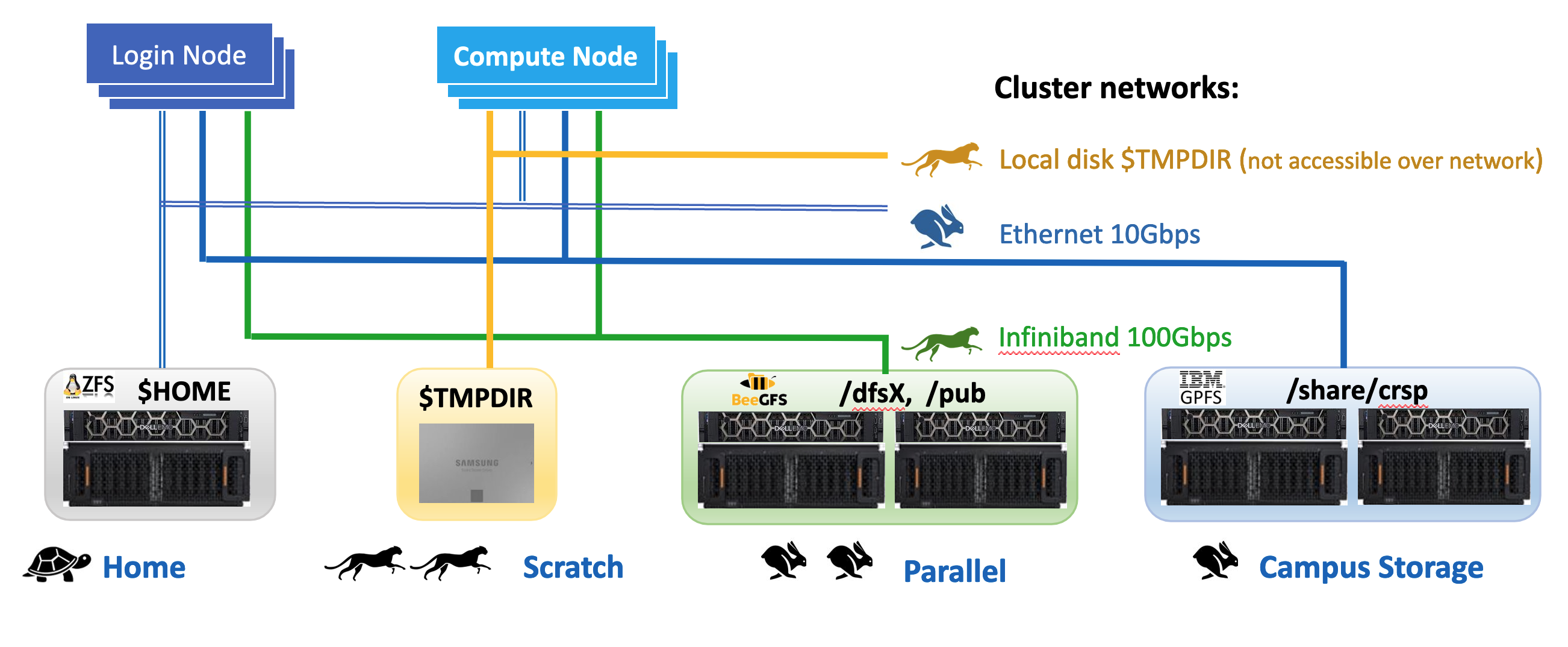

RCIC supports several storage systems, each with its own sweet spot for price and performance. Connectivity, file system architecture, and physical hardware all contribute to the performance of HPC3 storage systems.

All storage systems depicted below are available from the HPC3 cluster. CRSP (Campus Storage) is unique in that it can also be accessed from desktops and laptops without going through HPC3.

Fig. 1.1 HPC3 Storage pictogram

Attention

The following summary explains what each storage system provides and what it should be used for, and links to in-depth how-to guides:

| Name | Access | Performance | How to use |
|---|---|---|---|
| | On all nodes via NFS mount | Slowest, yet sufficient when used properly | Store small files, compiled binaries, and data files on the order of MBs. Not for data-intensive batch jobs. |
| Scratch | Local disk space unique to each compute node | Fastest performance; data is removed when the job completes | As scratch storage ($TMPDIR) for batch jobs that repeatedly access many small files or make frequent small reads/writes. |
| DFS | On all nodes via BeeGFS mount | Best for processing medium/large data files (on the order of 100s of MBs to GBs) | To keep source code and binaries. For data used in batch jobs. Not for writing/reading many small files. |
| CRSP (campus research storage pool) | (1) On all nodes via NFS mount (2) From any campus IP or VPN-connected user laptop | Best for processing medium/large data files (on the order of 100s of MBs to GBs) | To keep source code and binaries. Sometimes for data used in batch jobs, although DFS or Scratch is usually the better choice. Not for writing/reading many small files. |
| | On all nodes via BeeGFS mount | Best for processing medium/large data files (on the order of 100s of MBs to GBs) | To keep source code and binaries. For data used in batch jobs. Available to a handful of labs as temporary storage. Not for writing/reading many small files. |
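The Scratch guidance above (use $TMPDIR for jobs that touch many small files) can be sketched in Python as a stage-in, process, stage-out pattern. This is a minimal illustration, not an RCIC-provided tool: the function name `run_in_scratch`, the `process` callback, and the directory layout are all hypothetical, and the sketch falls back to the system temporary directory when `TMPDIR` is not set.

```python
import os
import shutil
import tempfile
from pathlib import Path


def run_in_scratch(input_dir, output_dir, process):
    """Copy inputs to node-local scratch, process them there, copy results back.

    `process` is a hypothetical callable taking (work_in, work_out) Paths.
    """
    # Inside a batch job $TMPDIR points at node-local scratch; fall back to
    # the system temp directory so the sketch also runs outside a job.
    scratch_root = os.environ.get("TMPDIR", tempfile.gettempdir())
    work = Path(tempfile.mkdtemp(prefix="stage_", dir=scratch_root))
    try:
        work_in = work / "in"
        work_out = work / "out"
        # One bulk copy from shared storage (for example DFS or CRSP).
        shutil.copytree(input_dir, work_in)
        work_out.mkdir()
        # The many small reads/writes happen on local disk only.
        process(work_in, work_out)
        # Scratch data is removed when the job completes, so copy results
        # back to persistent storage before returning.
        shutil.copytree(work_out, output_dir, dirs_exist_ok=True)
    finally:
        shutil.rmtree(work, ignore_errors=True)
```

The design choice is to touch the shared filesystem only twice, once to read inputs and once to write results, which matches the advice that DFS and CRSP are not suited to writing or reading many small files.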

CRSP vs. DFS

The two largest-capacity storage systems available are CRSP and DFS. Both are parallel filesystems, but they differ in cost, availability, and usage model. The table below highlights the key differences and similarities between the two systems and can help you choose the right system (or combination of systems) to store your data.

| Feature | CRSP | DFS |
|---|---|---|
| Access | (1) From any campus IP or VPN-connected laptop (2) From HPC3 | Only from HPC3 |
| Availability | Highly available. No routinely planned outages. Can survive many types of hardware failures without downtime. | Routine maintenance outage about four times per year. Survives disk failures (RAID) only. |
| Backups | Backed up daily offsite with 90-day retention of deleted/changed files | |
| Cost | $50/TB/year | $100/TB/5 years |
| Encryption at rest | All data is encrypted at rest. | Only dfs3b is encrypted at rest. |
| File system | IBM Storage Scale (aka GPFS) | BeeGFS with ThinkParQ support |
| Performance | High-performance, but DFS is a better match for direct use from HPC3 | High-performance. The most commonly used storage on HPC3. |
| Quota management | Labs have space and file-count quotas. Users and groups can have sub-quotas set within the lab. | All users share the same group quota. All files must be written with the same Unix group ID to access quota space. |
| Snapshots | Daily file system snapshots allow users to self-recover from deletions or overwrites of files | No snapshots |
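To make the cost row concrete, the following Python sketch compares the two list prices for a given capacity and duration. The prices come from the table; the function names are hypothetical, and the assumption that DFS is purchased in whole five-year terms (not prorated) is mine and should be confirmed with RCIC.

```python
import math

CRSP_PER_TB_YEAR = 50   # from the table: $50/TB/year
DFS_PER_TB_TERM = 100   # from the table: $100/TB per 5-year term
DFS_TERM_YEARS = 5


def crsp_cost(tb, years):
    """Total CRSP cost in dollars for `tb` terabytes over `years` years."""
    return CRSP_PER_TB_YEAR * tb * years


def dfs_cost(tb, years):
    """Total DFS cost in dollars for `tb` terabytes over `years` years."""
    # Assumption: DFS is bought in whole 5-year terms, not prorated.
    terms = math.ceil(years / DFS_TERM_YEARS)
    return DFS_PER_TB_TERM * tb * terms


if __name__ == "__main__":
    for years in (1, 5):
        print(f"10 TB for {years} year(s): "
              f"CRSP ${crsp_cost(10, years)}, DFS ${dfs_cost(10, years)}")
```

Over a full five-year term DFS works out to $20/TB/year against $50/TB/year for CRSP, but price alone does not capture the differences in backups, snapshots, availability, and off-cluster access listed in the table.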